Read Large Binary File in Chunks Scipy Complex

Optimized ways to Read Large CSVs in Python

Hola! 🙋

At the current time, data plays a very important role in analysis and in building ML/AI models. Data can be found in various formats such as CSVs, flat files, JSON, etc., which, when huge, are difficult to read into memory. This blog revolves around handling tabular data in CSV format, that is, comma-separated files.

Problem: Importing (reading) a large CSV file leads to an Out of Memory error. Without enough RAM to read the entire CSV at once, the computer crashes.

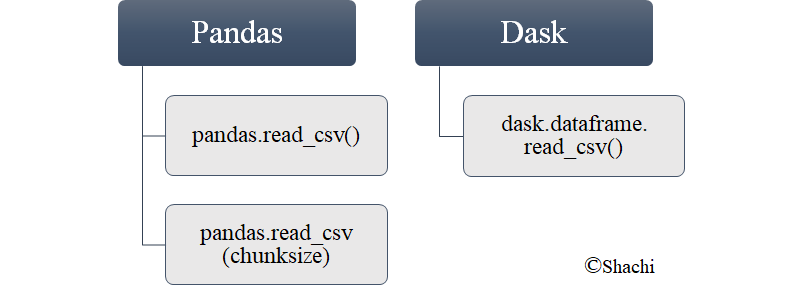

Here are some efficient ways of importing CSV in Python.

Now what? Well, let's prepare a dataset that is huge in size and then compare the performance (time) of the options shown in Figure 1.

Let's start..🏃

Create a dataframe of 15 columns and 10 million rows with random numbers and strings. Export it to CSV format, which comes to around ~1 GB in size.

import time

import numpy as np

import pandas as pd

df = pd.DataFrame(data=np.random.randint(99999, 99999999, size=(10000000,14)),columns=['C1','C2','C3','C4','C5','C6','C7','C8','C9','C10','C11','C12','C13','C14'])

df['C15'] = pd.util.testing.rands_array(5,10000000)  # note: pd.util.testing is deprecated in newer pandas releases

df.to_csv("huge_data.csv")
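If pd.util.testing is not available in your pandas version, a frame of the same shape can be built with NumPy alone. The following is only a sketch: the helper name make_dataset, the seed, and the choice of lowercase letters for the string column are assumptions for illustration, not part of the original notebook.

```python
import numpy as np
import pandas as pd


def make_dataset(n_rows, n_int_cols=14, str_len=5, seed=0):
    """Build integer columns C1..C14 plus one random string column C15."""
    rng = np.random.default_rng(seed)
    columns = [f"C{i}" for i in range(1, n_int_cols + 1)]
    df = pd.DataFrame(
        rng.integers(99999, 99999999, size=(n_rows, n_int_cols)),
        columns=columns,
    )
    # Draw random lowercase letters and join each row of letters into one string.
    letters = rng.choice(list("abcdefghijklmnopqrstuvwxyz"), size=(n_rows, str_len))
    df[f"C{n_int_cols + 1}"] = ["".join(row) for row in letters]
    return df


# A small frame for a quick check; pass 10_000_000 to match the size used in this blog.
small_df = make_dataset(1_000)
print(small_df.shape)
```

Starting with a small row count makes it easy to confirm the layout before writing the full ~1 GB file.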

Let's look over the importing options now and compare the time taken to read the CSV into memory.

PANDAS

The pandas Python library provides the read_csv() function to import a CSV as a dataframe structure so it can be computed on or analyzed easily. This function provides one parameter, described in a later section, to import your gigantic file much faster.

1. pandas.read_csv()

Input : Read CSV file

Output : pandas dataframe

pandas.read_csv() loads the whole CSV file into memory at once as a single dataframe.

start = time.time()

df = pd.read_csv('huge_data.csv')

end = time.time()

print("Read csv without chunks: ",(end-start),"sec")

Read csv without chunks: 26.88872528076172 sec

This may sometimes crash your system with an OOM (Out Of Memory) error if the CSV size is more than your memory's size (RAM). The next importing method improves on this.
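Before loading everything, it can help to get a rough idea of how much memory the full file would need. Here is a hedged sketch that reads only the first rows and scales up. The small in-memory CSV stands in for huge_data.csv, and total_rows is a hypothetical figure you would have to know or count for a real file.

```python
import io

import numpy as np
import pandas as pd

# Small in-memory CSV standing in for huge_data.csv (assumption, for illustration only).
rng = np.random.default_rng(0)
csv_text = pd.DataFrame(
    rng.integers(99999, 99999999, size=(5_000, 4)),
    columns=["C1", "C2", "C3", "C4"],
).to_csv(index=False)

# Read only the first rows and measure how much memory they occupy.
sample_rows = 1_000
sample = pd.read_csv(io.StringIO(csv_text), nrows=sample_rows)
bytes_per_row = sample.memory_usage(deep=True).sum() / sample_rows

# total_rows is hypothetical: for a real file you need to know or count the rows.
total_rows = 10_000_000
estimated_gb = bytes_per_row * total_rows / 1e9
print(f"~{bytes_per_row:.0f} bytes per row, ~{estimated_gb:.2f} GB for {total_rows:,} rows")
```

The estimate is only as good as the sample: string columns with varying lengths can make the first rows unrepresentative.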

2. pandas.read_csv(chunksize)

Input : Read CSV file

Output : pandas dataframe

Instead of reading the whole CSV at once, chunks of the CSV are read into memory. The size of a chunk is specified using the chunksize parameter, which refers to the number of lines. This function returns an iterator that walks through these chunks so they can be processed as needed. Since only a part of a large file is read at once, a small amount of memory is enough to fit the data. Later, these chunks can be concatenated into a single dataframe.

start = time.time()

#read data in chunks of 1 million rows at a time

chunk = pd.read_csv('huge_data.csv',chunksize=1000000)

end = time.time()

print("Read csv with chunks: ",(end-start),"sec")

pd_df = pd.concat(chunk)

Read csv with chunks: 0.013001203536987305 sec

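This option is faster and is best to use when you have limited RAM. Note that the timer above stops before pd.concat() runs: with chunksize, read_csv() only returns an iterator, so the measured time covers creating that iterator, while the rows themselves are read when the chunks are consumed. Alternatively, a newer Python library, Dask, can also be used, as described below.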
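When RAM is limited, the chunks do not have to be concatenated at all; each one can be processed and then discarded. Here is a minimal sketch of that pattern, assuming a small in-memory CSV in place of huge_data.csv and a running mean of C1 as the hypothetical per-chunk work. The timer wraps the loop here, so it includes the actual reading.

```python
import io
import time

import numpy as np
import pandas as pd

# Small in-memory CSV standing in for huge_data.csv (assumption, for illustration only).
rng = np.random.default_rng(0)
sample = pd.DataFrame(rng.integers(0, 100, size=(10_000, 3)), columns=["C1", "C2", "C3"])
csv_text = sample.to_csv(index=False)

start = time.time()
total_rows = 0
c1_sum = 0
# The data is only read while the loop pulls chunks from the iterator.
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=1_000):
    total_rows += len(chunk)
    c1_sum += int(chunk["C1"].sum())
end = time.time()

print("Rows read:", total_rows)
print("Mean of C1:", c1_sum / total_rows)
print("Read csv with chunks (including iteration):", end - start, "sec")
```

Only one chunk lives in memory at a time, so peak usage depends on chunksize rather than on the size of the file.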

DASK

Input : Read CSV file

Output : Dask dataframe

While reading large CSVs, you may encounter an out of memory error if the file doesn't fit in your RAM; this is where Dask comes into the picture.

- Dask is an open-source Python library with the features of parallelism and scalability, included by default in the Anaconda distribution.

- It extends its features of scalability and parallelism by reusing existing Python libraries such as pandas, numpy or sklearn. This makes it comfortable for those who are already familiar with these Python libraries.

- How to start with it? You can install it via pip or conda. I would recommend conda because installing via pip may create some issues.

pip install dask

Well, when I tried the above, it created some issues afterwards, which were resolved using a GitHub link to externally add the dask path as an environment variable. But why make a fuss when a simpler option is available?

conda install dask

- Code implementation:

from dask import dataframe as dd

start = time.time()

dask_df = dd.read_csv('huge_data.csv')

end = time.time()

print("Read csv with dask: ",(end-start),"sec")

Read csv with dask: 0.07900428771972656 sec

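Dask seems to be the fastest in reading this large CSV without crashing or slowing down the computer. Wow! How good is that?!! A new Python library that modifies existing ones to introduce scalability. Note that dd.read_csv() is lazy, so this timing covers setting up the read rather than loading every row into memory.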

Why DASK is better than PANDAS?

- Pandas utilizes a single CPU core while Dask utilizes multiple CPU cores by internally chunking the dataframe and processing the chunks in parallel. In simple words, multiple small dataframes of a large dataframe get processed at a time, whereas under pandas, operating on a single big dataframe takes a long time to run.

- Dask can handle large datasets on a single machine by exploiting its multiple CPU cores, or on a cluster of machines, which is referred to as distributed computing. It provides a sort of scaled pandas and numpy libraries.

- Not only dataframes, Dask also provides array and scikit-learn libraries to exploit parallelism.

Some of the libraries Dask provides are shown below.

- Dask Arrays: parallel Numpy

- Dask Dataframes: parallel Pandas

- Dask ML: parallel Scikit-Learn

We will only concentrate on Dataframe as the other two are out of scope. But, to get your hands dirty with those, this blog is best to consider.

How Dask manages to store data which is larger than the memory (RAM)?

When we import data, it is read into our RAM, which highlights the memory constraint.

Let's say you want to import 6 GB of data into your 4 GB of RAM. This can't be achieved via pandas since the whole data doesn't fit into memory in a single shot, but Dask can do it. How?

Instead of computing first, Dask creates a graph of tasks which describes how to perform that computation. It believes in lazy computation, which means that Dask's task scheduler creates a graph first and computes that graph only when requested. To perform any computation, compute() is invoked explicitly, which invokes the task scheduler to process the data making use of all cores and, at last, combines the results into one.

It would not be difficult to understand for those who are already familiar with pandas.

Couldn't hold my learning curiosity, so happy to publish Dask for Python and Machine Learning with deeper study.

Conclusion

Reading a ~1 GB CSV into memory with various importing options can be assessed by the time taken to load it into memory.

pandas.read_csv is the worst when reading a CSV of larger size than the RAM's.

pandas.read_csv(chunksize) performs better than the above and can be improved further by tweaking the chunksize.

dask.dataframe proved to be the fastest since it deals with parallel processing.

Hence, I would recommend coming out of your comfort zone of using pandas and trying Dask. But just FYI, I have only tested Dask for reading a large CSV, not for the computations as we do in pandas.

You can check my GitHub code to access the notebook covering the coding part of this blog.


Feel free to follow this author if you liked the blog, because this author promises to be back again with more interesting ML/AI related stuff.

Thank you,

Happy Learning! 😄

You can get in touch via LinkedIn.

Source: https://medium.com/analytics-vidhya/optimized-ways-to-read-large-csvs-in-python-ab2b36a7914e
